About Us:

Wandu Cloud (万度云): interactive, responsive, immersive VR panoramic tours · Interactive play · Creative customization

Wandu Panoramic Tour is dedicated to research and development that improves the readability and interactivity of VR panoramas.

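Wandu Panoramic Tour seamlessly blends video, sound, images, animation, and other media, simulating a visitor's point of view as they walk through different scenes. Its distinctive interactive play gives visitors a fresh sense of being there in person and a new kind of interactive experience.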

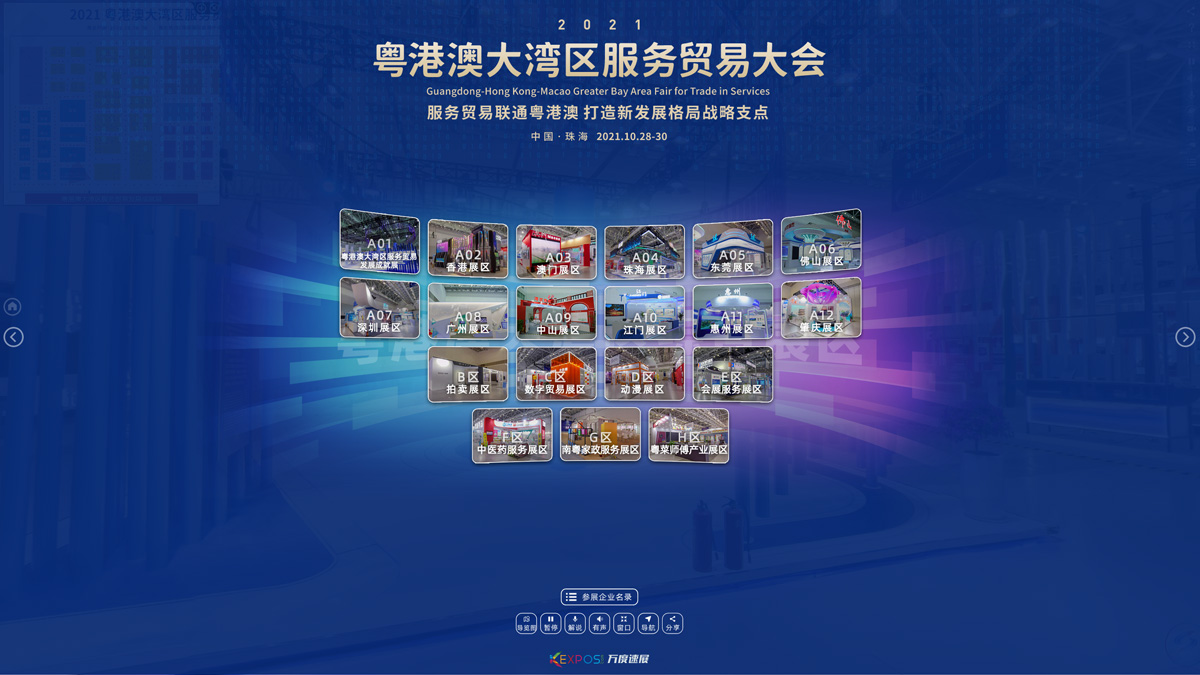

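Since our founding, we have delivered projects including the online digital platform for the Guangdong-Hong Kong-Macao Greater Bay Area Trade in Services Conference, the online exhibition for Zhuhai International Design Week, a virtual aerial flight over the western main urban district, interactive play on Yeli Island, digital cultural and creative products for the Nanyue Xiangshan Ancient Post Road, the Doumen Museum online exhibition hall, and a digital exhibition hall for intangible cultural heritage...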

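Wandu Panoramic Tour owns its self-developed underlying technology and focuses on professional custom development. Every stage, from client consultation to launch, is held to strict standards, and every detail can be customized to the client's brand image and requirements.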

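Thanks to a responsive, multi-resolution design, it runs smoothly on Apple, Android, and Windows devices. A single production can serve WeChat posts, interactive websites, large-screen presentations, touchscreen kiosks, VR experiences, and more.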

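Wandu Panoramic Tour supports interactive play through VR headsets, motion sensing, gestures, remote controls, simulation devices, and more. With more ways to play and more applications, it can be paired with hardware and deployed in urban planning exhibition halls, event venues, and many other settings.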

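Wandu Panoramic VR Multimedia Digital Exhibition Halls · Everything that should move, moves

You invested heavily and built your multimedia digital exhibition hall with great care. How could it stand still once it goes online?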

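The special exhibition "Spring Blossoms: 40 Years of Zhuhai Urban Design", produced with Wandu Panoramic Tour technology, seamlessly embeds a large number of videos under precise control. Voice narration syncs automatically, and multiple videos in the same scene play automatically without interfering with one another. By faithfully recreating a large modern multimedia exhibition hall on the internet with digital technology, it is an active exploration of bringing multimedia digital exhibition halls online and spreading them via the internet.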

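Every video in the hall can be clicked to open in full playback, and every display board can be clicked to enlarge, improving the readability of the content and the effective communication of its ideas.

Turning multimedia digital exhibition halls into online digital exhibition halls with panoramic VR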

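Media fusion · Seamless embedding

Wandu's online multimedia exhibition halls use seamless video embedding, with precisely configured field-of-view and sound-field ranges that trigger automatic playback. Different videos, voiceovers, and sound effects are embedded at different angles of the same scene, so everything that should move moves and everything that should make sound is heard, creating a more realistic and striking on-site experience.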
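To make the idea of field-of-view and sound-field triggering concrete, here is a minimal sketch. It assumes each embedded video is a hotspot with a yaw/pitch direction and a trigger angle, and that the player starts every video within range but gives sound only to the one nearest the view center, so videos in the same scene do not talk over each other. The names `VideoHotspot`, `view_offset`, and `playback_state`, as well as the "nearest one gets audio" rule, are hypothetical illustrations, not Wandu's actual implementation.

```python
from dataclasses import dataclass


@dataclass
class VideoHotspot:
    """Hypothetical description of one video embedded in a panorama scene."""
    name: str
    yaw: float          # horizontal direction of the video, in degrees
    pitch: float        # vertical direction of the video, in degrees
    trigger_deg: float  # play when the view center is within this many degrees


def yaw_difference(a: float, b: float) -> float:
    """Smallest absolute difference between two yaw angles, in degrees (0 to 180)."""
    d = (a - b) % 360.0
    return min(d, 360.0 - d)


def view_offset(view_yaw: float, view_pitch: float, h: VideoHotspot) -> float:
    """Approximate angular offset of a hotspot from the view center.

    Uses the larger of the yaw and pitch differences, a simple
    rectangular approximation rather than a true great-circle distance.
    """
    return max(yaw_difference(view_yaw, h.yaw), abs(view_pitch - h.pitch))


def playback_state(view_yaw, view_pitch, hotspots):
    """Return {name: (playing, audible)} for the current view direction.

    Every hotspot inside its trigger range plays; only the one closest
    to the view center is audible (an assumed rule for this sketch).
    """
    in_range = [h for h in hotspots
                if view_offset(view_yaw, view_pitch, h) <= h.trigger_deg]
    nearest = min(in_range,
                  key=lambda h: view_offset(view_yaw, view_pitch, h),
                  default=None)
    playing = {h.name for h in in_range}
    audible = nearest.name if nearest is not None else None
    return {h.name: (h.name in playing, h.name == audible) for h in hotspots}


hotspots = [
    VideoHotspot("intro", yaw=0, pitch=0, trigger_deg=30),
    VideoHotspot("history", yaw=90, pitch=0, trigger_deg=30),
    VideoHotspot("wall", yaw=20, pitch=5, trigger_deg=30),
]
print(playback_state(5, 0, hotspots))
# prints {'intro': (True, True), 'history': (False, False), 'wall': (True, False)}
```

In this sketch the "wall" video keeps playing silently while "intro" carries the sound. A real player might instead fade volume smoothly with angular distance, which would model a sound-field range more closely than a single on/off switch.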

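With custom program development, interactive projections remain interactive in the online exhibition hall as well.

Combined with motion sensing, controllers, scene simulation devices, and other hardware, the digital exhibition hall can be quickly deployed to schools, venues, communities, and event sites, where these digital branch halls reach a wider audience.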

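Creative cultural tourism · Interactive play

We brought an entire island, Yeli Island in Zhuhai, onto the internet in panoramic VR.

Realistic scene simulation: watch egrets take flight together, hear waves crash against the shore, insects chirping and birds singing...

You can also choose a tour route and switch between game mode and free-browsing mode, feeling the charm of this romantic city even through a screen.

Panoramic VR creative production for tourist attractions, parks, and scenic spots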

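Treasure hunts and Q&A · Creative communication

Wandu Panoramic Tour captures footage seamlessly from a simulated user's point of view, adds simulated sound effects, and embeds synchronized narration, video, text and images, and vector animation to create a more realistic experience. The user interface and interaction design are developed around the character of each scenic area.

Two control modes are offered, free touring and game, with interactive features such as route selection, treasure-hunt points, and finding answers within the scenes. Playing while sightseeing makes the visit more memorable.

An opening sequence of Wandu panoramic aerial footage adds an even more striking visual impact.

Combined with motion sensing, scene simulation, and other hardware, it can be deployed in corporate or urban planning exhibition halls and at event sites.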

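Information platform · Precise control

For the 2022 Guangdong-Hong Kong-Macao Greater Bay Area Trade in Services Conference, our online platform moved the entire 26,000-square-meter exhibition hall online, recreating the fully built exhibition site piece by piece "in the cloud".

In total, 11,530 scenes were produced with panoramic VR photography, embedding 421 interview and promotional videos, 119 voice narrations, 651 enlargeable display boards with text and images, and 200+ company introductions and business cards...

The digital exhibition allowed this edition of the conference to break through the limits of time and space, continuing its role in showcasing the latest achievements in Greater Bay Area trade in services and in jointly building a platform for innovative development, exchange, and cooperation in trade in services for the new era.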

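Bringing large exhibitions onto the internet

Panoramic VR · Online exhibition viewing

Wandu Panoramic Tour turns VR online exhibitions into comprehensive information platforms, an effective complement to offline exhibitions and an extension beyond the limits of time and space.

With precise presentation and control, it simulates a user's visit to capture every point of interest, embedding videos, text-and-image introductions, voice narration, company business cards, product links, and more into the scenes. This showcases exhibitors in full and conveys their information, while giving visitors and exhibitors more valuable content and driving more offline transactions.

Leveraging its technical strengths and AI processing, the Wandu Express Exhibition (万度速展) team can bring a large exhibition covering tens of thousands of square meters online in a short time.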

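Bird's-eye view · Simulated flight

Using drones carrying high-definition cameras at well-planned shooting points, the entire western main urban district is captured in full view.

Compared with 3D modeling, live-action capture is more realistic, faster, and lower in cost, and virtual flight offers a better control experience.

Hideable dynamic labels keep the view clear, while lens flares and animations make the scenes more lifelike...

Panoramic VR presentation for urban planning and construction projects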

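Live-action control · Exhibition hall interaction

Wandu Cloud VR panoramic aerial photography uses drones carrying high-definition cameras to capture and process data, letting users look down from high above and take in the whole picture.

Compared with traditional 3D modeling, live-action VR aerial photography is more realistic, faster to produce, and lower in cost. Combined with motion sensing, simulated windows, and other hardware, it can be deployed in corporate or urban planning exhibition halls, at trade shows, and at all kinds of event sites.

Wandu Panoramic Tour technology blends multiple media with interactive information, delivering a richer sensory experience and better showcasing the scale and achievements of enterprises, industrial parks, and cities.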

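3D cultural relics · Enlargeable text and images

Party-building exhibition halls and fine cultural relic galleries produced with Wandu Panoramic Tour technology feature seamlessly embedded video, synchronized narration, and enlargeable text and images.

With Wandu 3D scanning technology, cultural relics or products can be scanned and embedded into the scene so users can examine them closely.

The cases shown here include our productions of the entire Doumen Museum as well as its Doumen Revolutionary Struggle History exhibition, Doumen History exhibition, and Fine Collection of Cultural Relics gallery.

Wandu Panoramic VR digital museum online exhibition halls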

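Enhanced interaction · High-definition readability

Seamlessly embedded video, pop-up 3D relics, synchronized voice narration, and text and images that can be clicked and enlarged for close reading...

These make the digital museum experience more interactive and more readable, turning it into a true online branch that transcends time and space and is open around the clock, so that people can visit the museum anytime, anywhere, and better learn about and understand traditional culture.

Combined with Wandu Panoramic Tour motion sensing, controllers, scene simulation devices, and other hardware, the digital exhibition hall can be quickly deployed to schools, venues, communities, and event sites for wider and more effective reach.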

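Location and surroundings · Residential community · Show flat

Satellite panoramic VR analyzes the location value of a real estate development.

Aerial panoramic VR gives a bird's-eye view of the entire development, marking nearby municipal facilities, schools, shopping, parks...

Walk through the community from a simulated user's point of view, with virtual ambient sound and treasure-hunt interaction, playing while you look around...

Carefully shot show flats with interactive presentation can also be shared with family and friends, boosting property sales.

Wandu real estate panoramic VR marketing system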

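Scenario interaction · Flexible customization

Combining satellite, aerial, and ground-level VR panoramas, it packages everything real estate clients care about (the development's location, surrounding amenities, community environment, and show flats) and delivers it straight to the customer.

With voice narration, embedded video, and interactive games, users can step onto the site, see the value of the property for themselves, and share it with family and friends, helping drive sales.

Combined with large screens, VR headsets, motion sensing, simulated controllers, and other hardware, the entire development can be brought to trade shows and event sites at any time to liven up the atmosphere.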

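Wandu Panoramic Tour owns its self-developed core technology and will customize the interface, add features, and build in background music, interactive Q&A, and triggered interactions to match your brand's visual identity and marketing needs... Everything adapts to your needs, freeing you from the simple, template-bound limits of generic panorama platforms and conveying your brand to users more effectively.

Wandu Cloud Perspective · Panoramic VR cases · News · Latest updates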


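A data view of the informatization and precision of the Guangdong-Hong Kong-Macao Greater Bay Area Trade in Services Conference online platform

The first Guangdong-Hong Kong-Macao Greater Bay Area Trade in Services Conference was originally scheduled for October 28-30 at the Zhuhai International Convention & Exhibition Center, but because of the pandemic and other factors it was postponed and moved online. Entrusted with the project, our company built the online platform for the event. The Wandu Express Exhibition team worked closely with the Huafa team to bring the already-built exhibition site "into the cloud"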


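Step into the Doumen District Intangible Cultural Heritage VR Digital Exhibition Hall: hear Tanka folk songs and see Doumen's intangible heritage

Intangible cultural heritage is a brilliant treasure left by the long history of the Chinese nation and a spiritual wealth that embodies the wisdom and outstanding values of our forebears. Chinese culture carries the spiritual lifeblood of our country and nation; it must be passed down and protected from generation to generation, and it must also keep pace with the times and be renewed.

Baitengshan Wetland Park VR Panoramic Digital Guide

Baitengshan Ecological Restoration Wetland Park lies at the foot of Baiteng Mountain, west of the Jinwan Interchange at the Huxin Road intersection, and covers 100,000 square meters. Originally an abandoned quarry, it was carefully planned and redesigned around the natural hills and lake using the sponge city concept. After restoration it was transformed into a large wetland park that combines wetland protection, ecological restoration, cultural display, science education, and leisure sightseeing.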

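Stunning! Come check out Zhuhai's new Lovers' Road!

The West District Lovers' Road (Airport East Road), developed by Zhuhai's Jinwan District, covers the waterfront section from Niwanmen Bridge to the Haitan Road intersection. Benchmarked directly against Shanghai's Bund, Shenzhen Bay, and Zhuhai's original Lovers' Road, it aims to create public space for a world-class coastal ecological new district. The coastline runs about 16 km and is divided into five sections: LOHAS Park, Sports Vitality, Ecological Experience, Ecological Science, and City Gateway. The first-phase sections (LOHAS Park, Sports Vitality, and Ecological Experience) are now largely complete. It's the perfect season for a spring outing, so go take a walk while you can.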

Responsive design · Adapts to every device

Mobile phone

Tablet

Laptop

Desktop computer

VR headset

Smart TV

Touchscreen

LED large screen

Projection

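Featured functions · Innovation and distinctiveness

Precise positioning

Game mode

Seamless video

Interactive projection

Virtual flight

Panorama comparison

3D display

Vector animation

Enlargeable text and images

Synchronized narration

Treasure-hunt points

Interactive Q&A

Professional VR

VR screen sync

Auto-trigger

Precise sharing

Simulated sound effects

Flexible customization

Developed on demand: more features, created for you.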

More ways to play · More applications

Motion sensing

Foot control

All-in-one VR headset

Gestures

Voice control

Remote control